Research

Our visual system is constantly bombarded with a large amount of information from the environment, and how we intelligently select and accumulate this information to maintain a stable world representation is not understood. I aim to address this question using neuroscientific and psychophysical methods. I’m particularly interested in using deep learning models to identify the computational mechanisms (e.g., attention) required for producing intelligent visual behaviors (e.g., eye movements). I believe that understanding and developing a vision system that can mimic and predict intelligent human visual behaviors will benefit both science and applications, improving the interaction between human and computer vision and making each more interpretable to the other. Currently, I’m exploring the use of generative models to explain human object-based perception and attention.

Keywords: Vision, Attention, Eye-movements, Computational Modeling

Highlighted Projects and Publications:

For the most up-to-date and comprehensive list of publications, please visit my Google Scholar profile.

Generated Object Reconstructions for Object-based Attention

Humans need to interact with objects, so evolution endowed us with a visual system that constantly attempts to reconstruct familiar or meaningful objects (e.g., seeing a face on Mars). This project explores how and to what extent this top-down object reconstruction is functionally used for object recognition, grouping, and attention. We use a generative, object-centric approach to study this problem.

Selected Publications:

- Ahn S, Adeli H, & Zelinsky G. (in press). The attentive reconstruction of objects facilitates robust object recognition. PLOS Computational Biology

- Ahn S, Adeli H, Zelinsky G. Reconstruction-guided attention improves the robustness and shape processing of neural networks. SVRHM at NeurIPS Workshops. 2022

- Adeli, H., Ahn, S., & Zelinsky, G. J. (2023). A brain-inspired object-based attention network for multiobject recognition and visual reasoning. Journal of Vision, 23(5), 16-16.

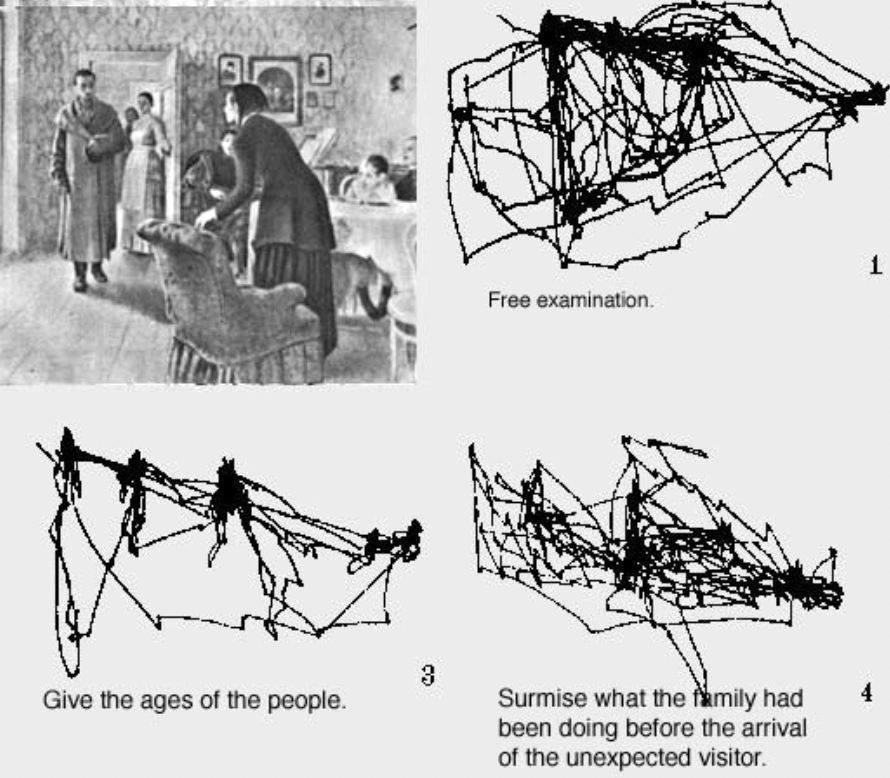

Decoding Cognitive States from Eye-Movements

Since Yarbus (1967), many studies have shown that eye movements provide a sensitive indicator of individual differences and of the influence of top-down cognitive control on visual processing. This project aims to extract meaningful features from eye movements and build computational models that can predict various cognitive states, including task demands, comprehension levels, memory performance, and even symptoms of certain psychiatric disorders.

Selected Publications:

- Ahn S, Kelton C, Balasubramanian A, Zelinsky G. Towards predicting reading comprehension from gaze behavior. ETRA. 2020

- Kelton C, Wei Z, Ahn S, Balasubramanian A, Das SR, Samaras D, Zelinsky G. Reading detection in realtime. ETRA. 2019

- Ahn S, Lee D, Hinojosa A, Koh S. Task Effects on Perceptual Span during Reading: Evidence from Eye Movements in Scanning, Proofreading, and Reading for Comprehension [under review]

Gaze Modeling and Prediction

This project aims to develop computational models that can predict human gaze trajectories during visual tasks, including free viewing and visual search, by incorporating biologically plausible algorithms (e.g., reinforcement learning) and encoding constraints (e.g., foveated representation). The ultimate goal is to use this understanding of how humans allocate their spatial attention to develop next-generation systems that can intelligently anticipate a user's needs or desires.

Selected Publications:

- Mondal S, Yang Z, Ahn S, Samaras D, Zelinsky G, Hoai M. Gazeformer: Scalable, Effective and Fast Prediction of Goal-Directed Human Attention. CVPR. 2023

- Yang Z, Huang L, Chen Y, Wei Z, Ahn S, Zelinsky G, Samaras D, Hoai M. Predicting goal-directed human attention using inverse reinforcement learning. CVPR. 2020

- Zelinsky G, Yang Z, Huang L, Chen Y, Ahn S, Wei Z, Adeli H, Samaras D, Hoai M. Benchmarking gaze prediction for categorical visual search. CVPR Workshops. 2019